In today’s interconnected digital landscape, maintaining robust security measures is paramount for businesses of all sizes. Threat actors constantly evolve their tactics, necessitating proactive approaches to identify and mitigate potential risks. Microsoft Sentinel, coupled with Logic Apps, offers a powerful solution to enhance security operations, enabling organizations to swiftly detect and respond to threats.

In this blog post, we’ll explore how to leverage Logic Apps to extract IP addresses from security events in Microsoft Sentinel and then retrieve their abuse scores from AbuseIPDB. This process gives security teams deeper insight into potential threats so they can take decisive action to safeguard their systems.

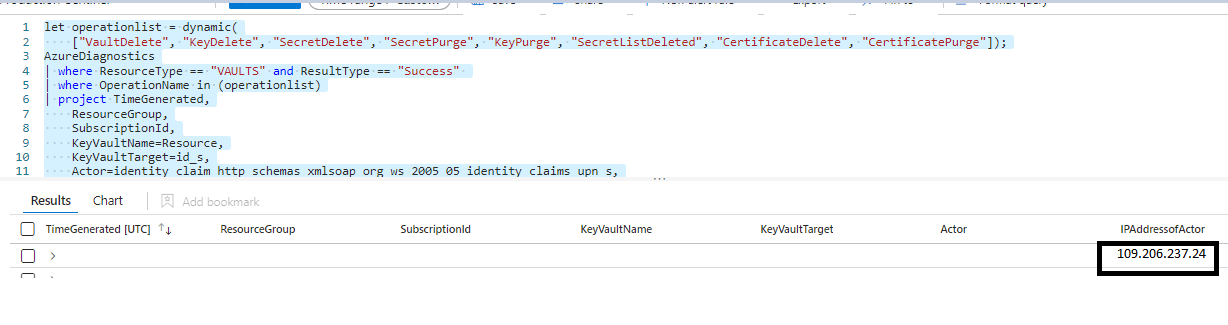

Step 1: KQL Query

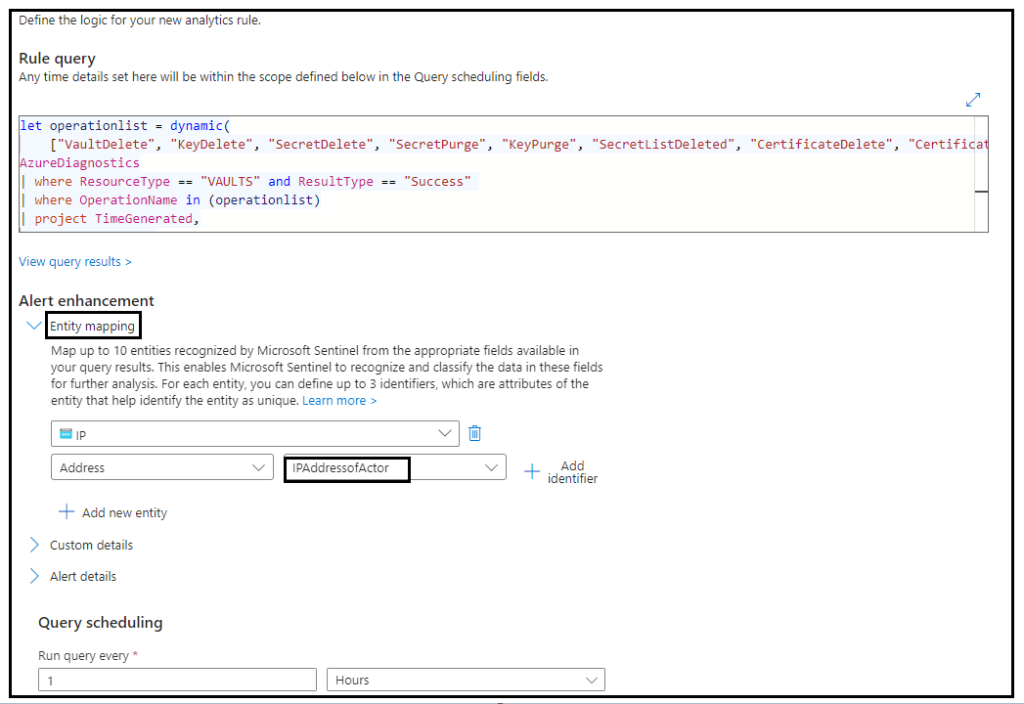

Let’s take an example where we have an analytics rule with a KQL query defined to raise an incident whenever any delete or purge activity occurs within Azure Key Vault.

let operationlist = dynamic(

["VaultDelete", "KeyDelete", "SecretDelete", "SecretPurge", "KeyPurge", "SecretListDeleted", "CertificateDelete", "CertificatePurge"]);

AzureDiagnostics

| where ResourceType == "VAULTS" and ResultType == "Success"

| where OperationName in (operationlist)

| project TimeGenerated,

ResourceGroup,

SubscriptionId,

KeyVaultName=Resource,

KeyVaultTarget=id_s,

Actor=identity_claim_http_schemas_xmlsoap_org_ws_2005_05_identity_claims_upn_s,

IPAddressofActor=CallerIPAddress,

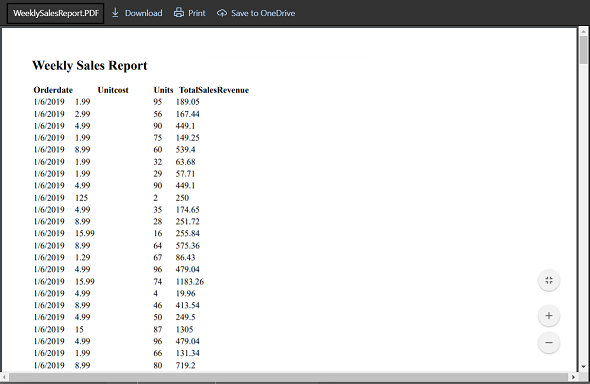

OperationNameWhen i run this query, here is how the output looks like.

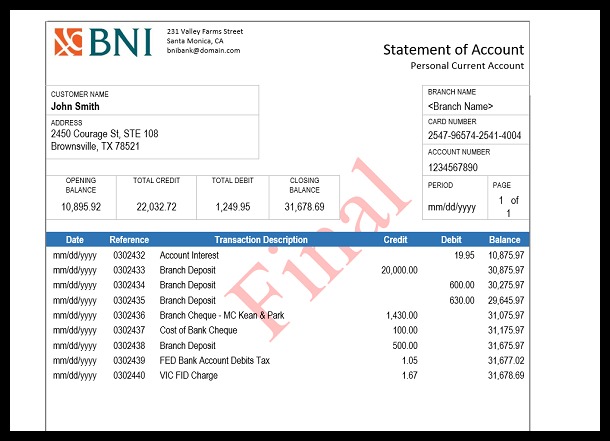

For the sake of data privacy, I have masked most of the information and purposely shown a known abusive IP address.

Now that we know our KQL query can detect deletions and purges along with the IP address of the actor, let’s put this all in an analytics rule.
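To make the filter logic concrete, here is a minimal Python sketch that mirrors the two `where` clauses of the query on a few in-memory records. The sample rows and their IP addresses are made up for illustration; only the column names and operation names come from the query above.

```python
# Operation names copied from the KQL query above.
OPERATIONS = {
    "VaultDelete", "KeyDelete", "SecretDelete", "SecretPurge",
    "KeyPurge", "SecretListDeleted", "CertificateDelete", "CertificatePurge",
}


def matches_rule(record: dict) -> bool:
    """Return True when a record would pass both `where` filters of the query."""
    return (
        record.get("ResourceType") == "VAULTS"
        and record.get("ResultType") == "Success"
        and record.get("OperationName") in OPERATIONS
    )


# Hypothetical sample rows (assumption: not real log data).
sample = [
    {"ResourceType": "VAULTS", "ResultType": "Success",
     "OperationName": "SecretDelete", "CallerIPAddress": "203.0.113.10"},
    {"ResourceType": "VAULTS", "ResultType": "Success",
     "OperationName": "SecretGet", "CallerIPAddress": "203.0.113.11"},
    {"ResourceType": "VAULTS", "ResultType": "Forbidden",
     "OperationName": "KeyDelete", "CallerIPAddress": "203.0.113.12"},
]

# Keep only the caller IPs of records that the rule would flag.
flagged = [r["CallerIPAddress"] for r in sample if matches_rule(r)]
```

Only the first row is flagged: the second is a read operation and the third did not succeed.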

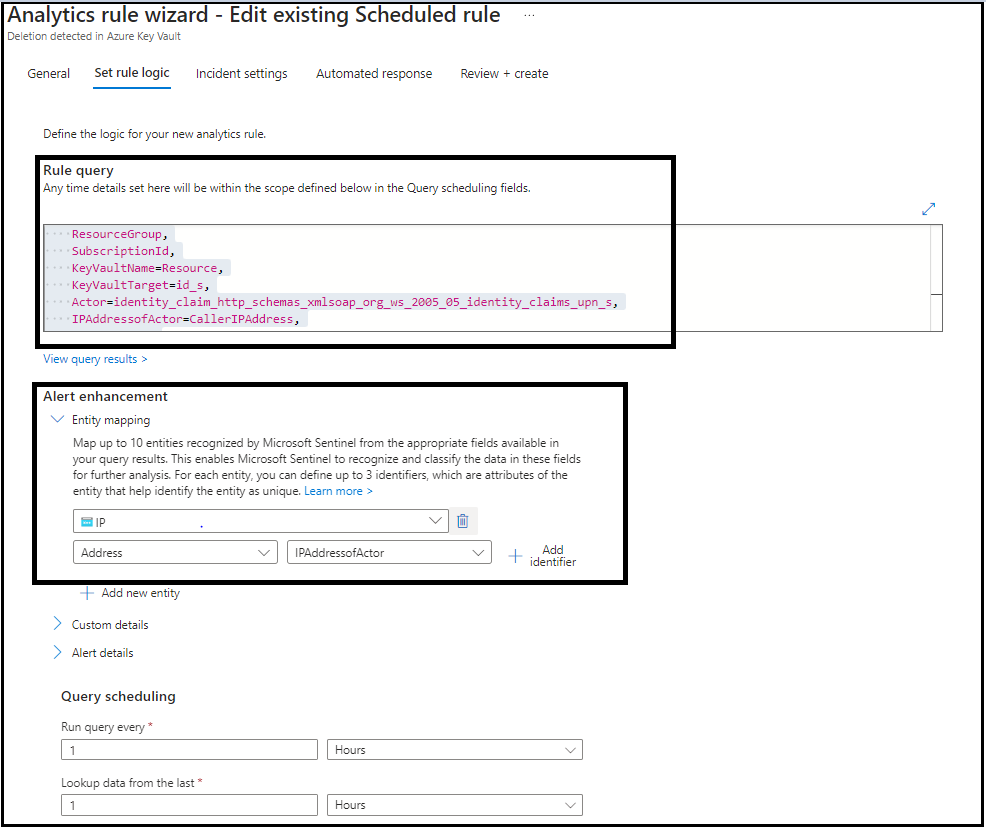

Step 2: Setting up Analytics rule

Here we set up a scheduled query through analytics that runs once every hour and detects whether any deletion activity has been carried out across all key vaults in Azure. This rule is also responsible for triggering the logic app that will look up the abuse score of the IP address from which the deletion was carried out.

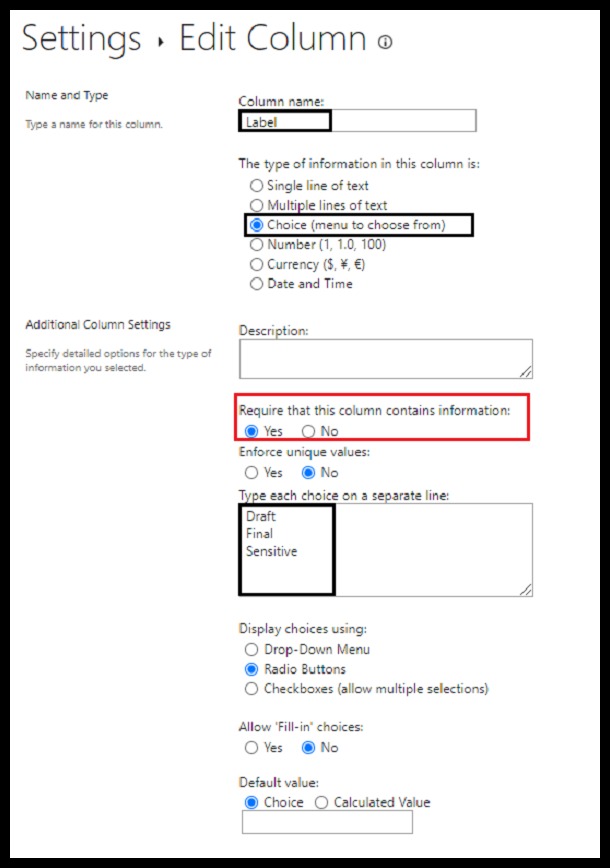

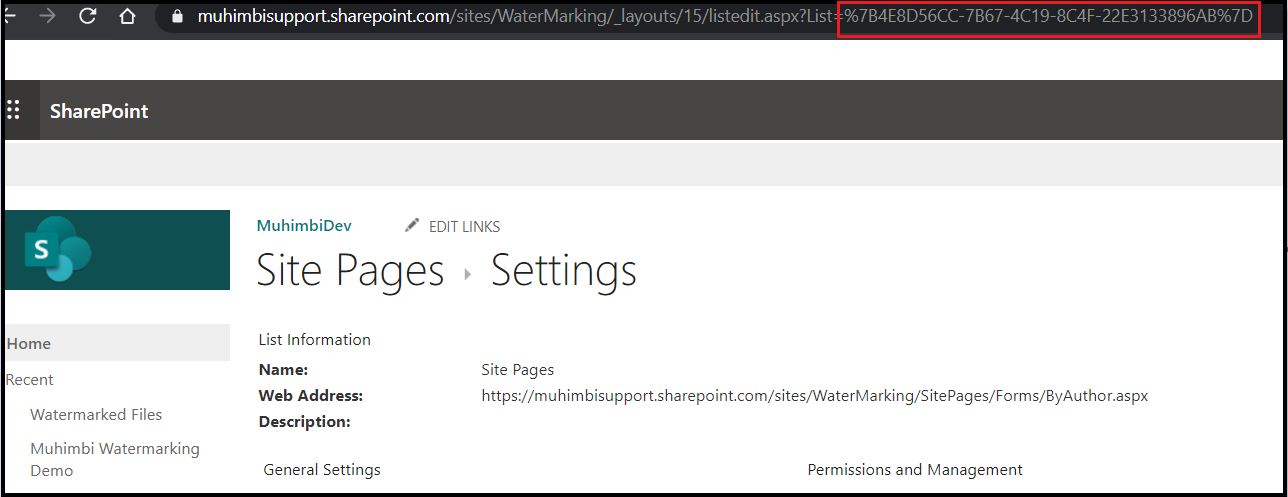

The KQL query that we created above exposes a column IPAddressofActor. We need to map this column to an entity as shown below.

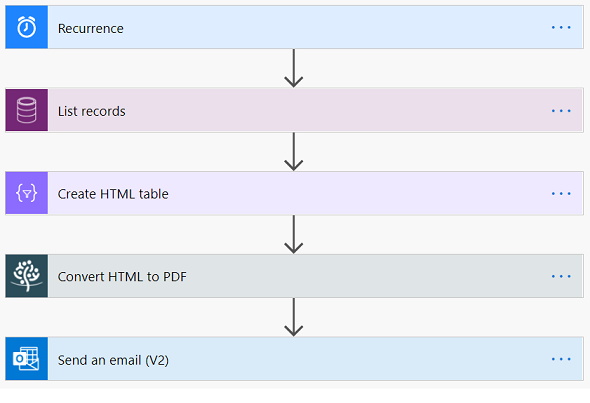

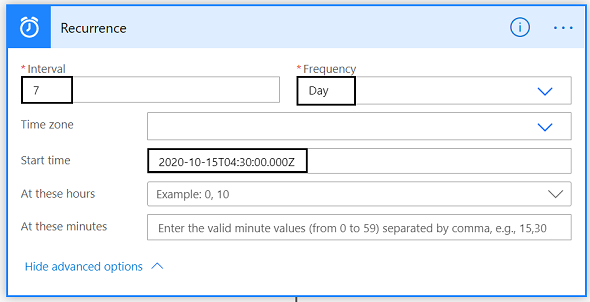

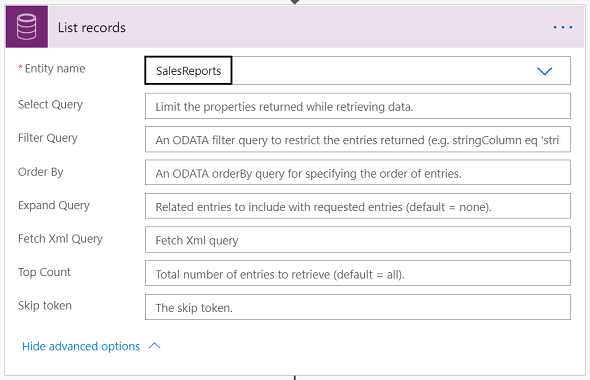

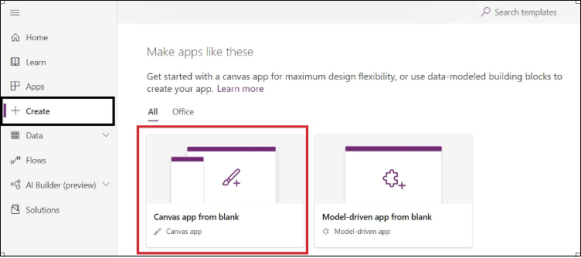

Step 3: Logic Apps

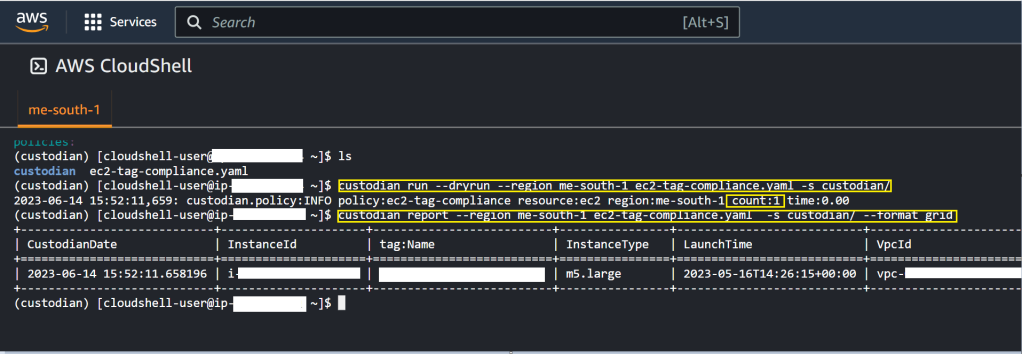

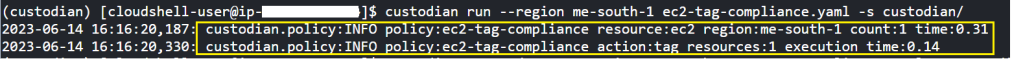

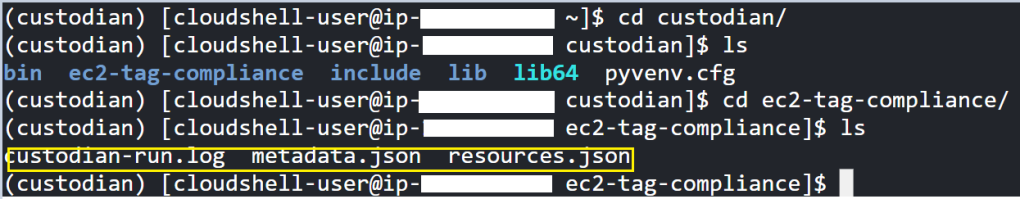

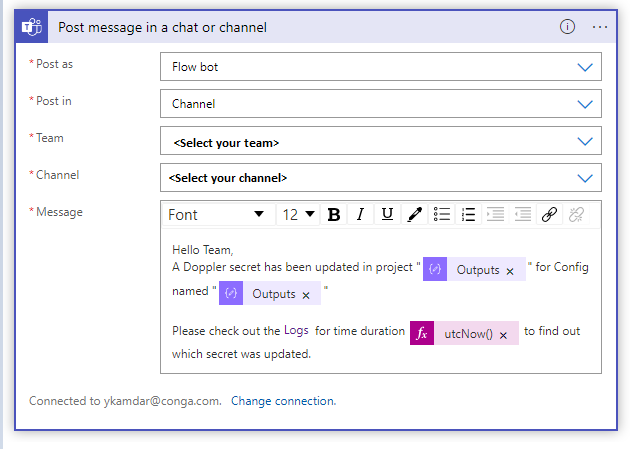

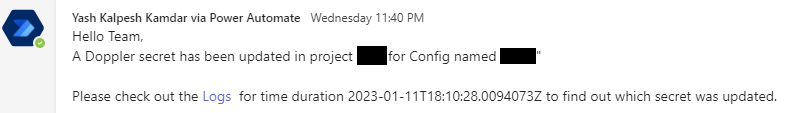

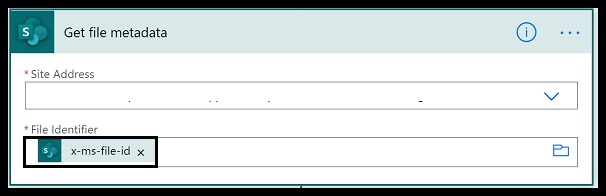

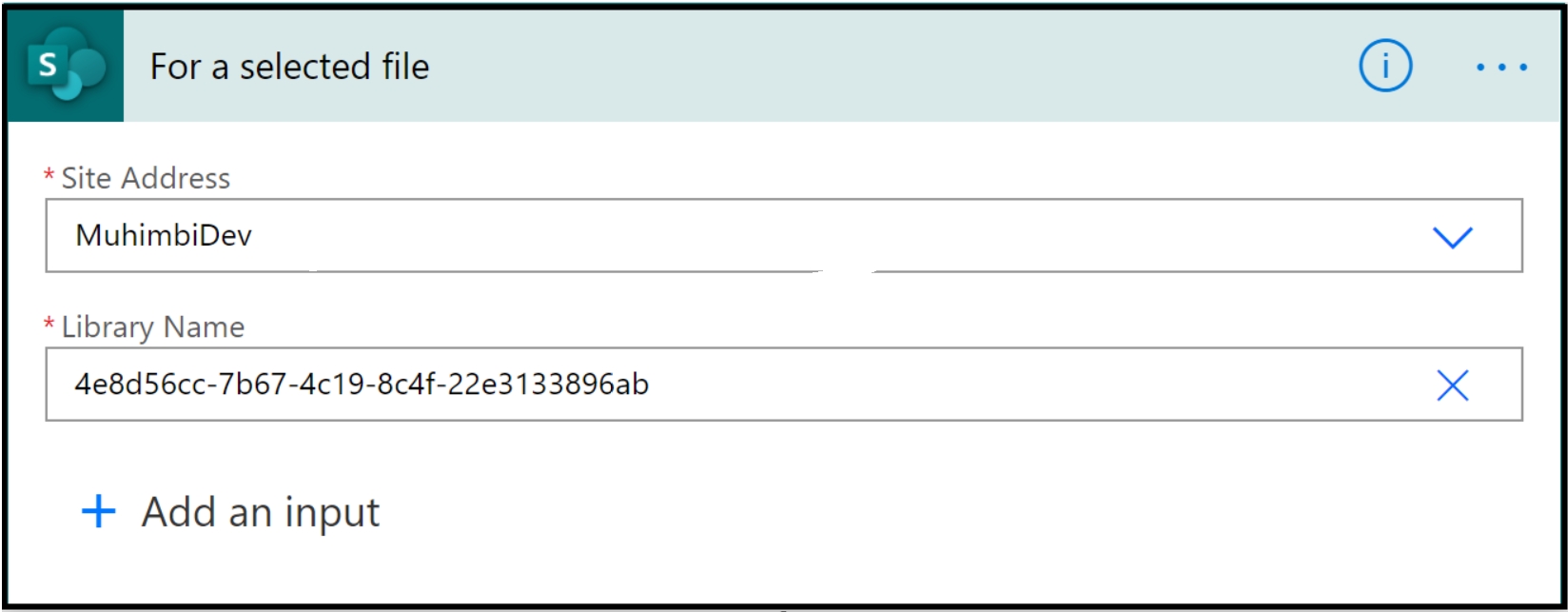

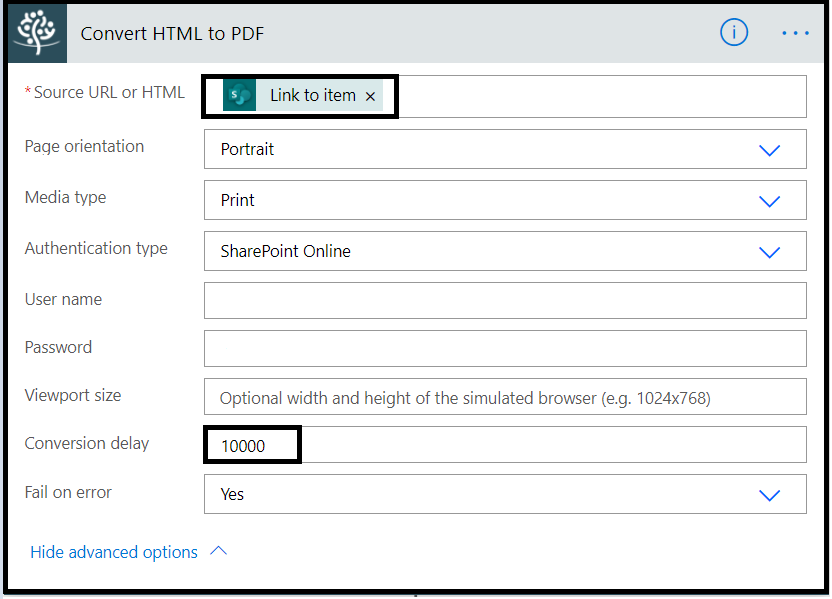

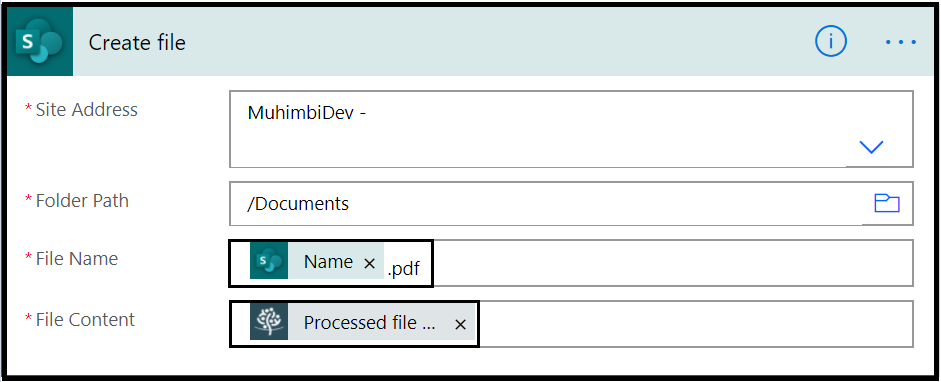

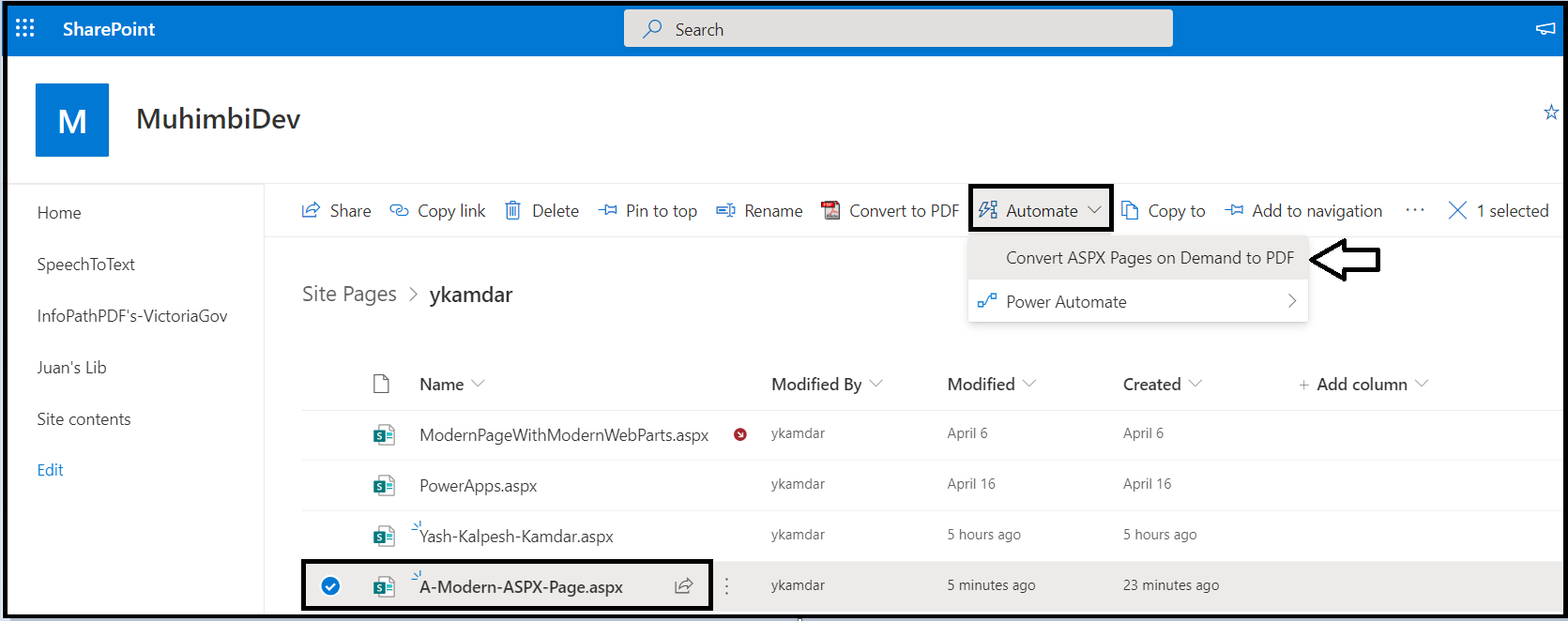

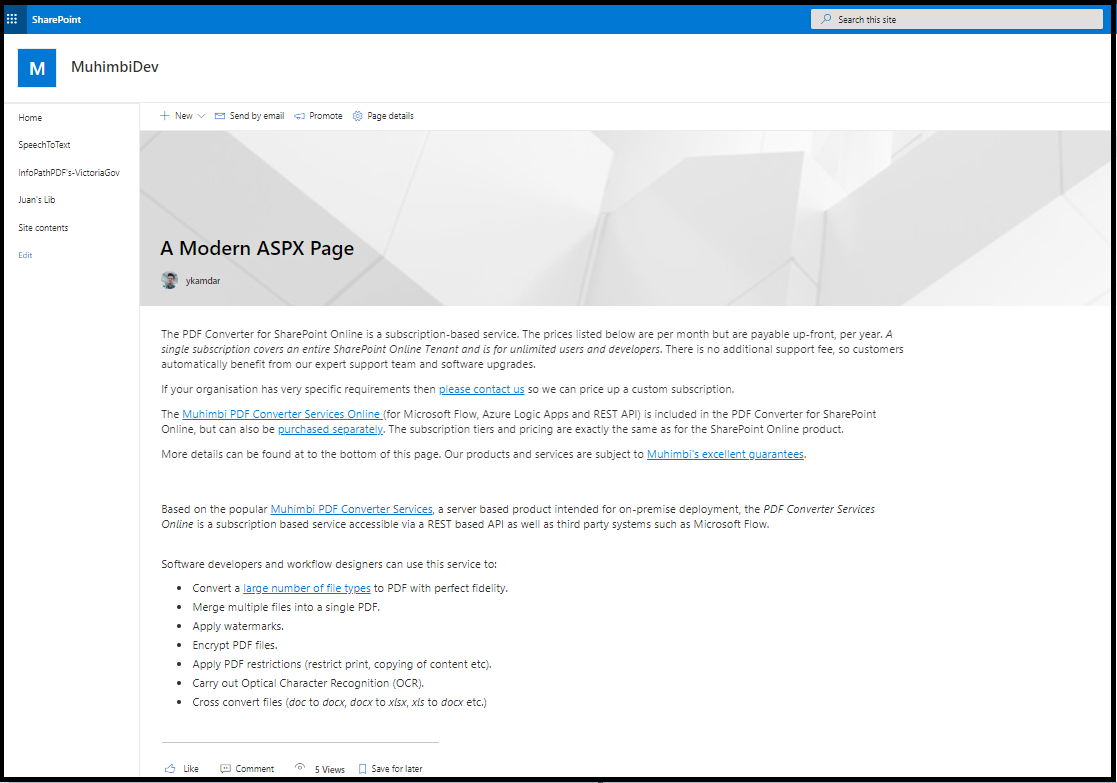

Let us configure a logic app that automatically gets triggered when our scheduled query rule performs a detection.

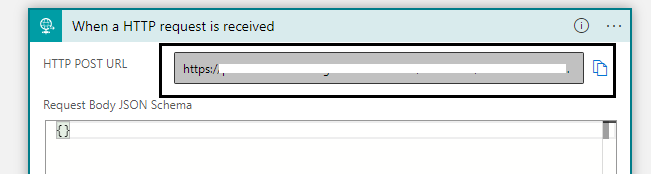

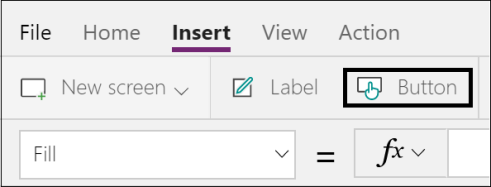

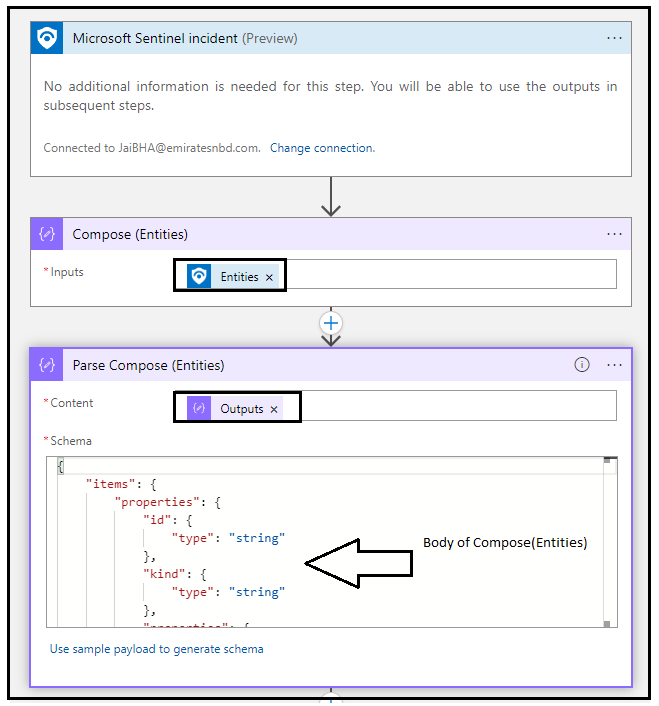

The first step is the trigger. It tells the logic app to run whenever a Microsoft Sentinel incident is created. Microsoft's documentation on Sentinel playbook triggers covers this in more detail.

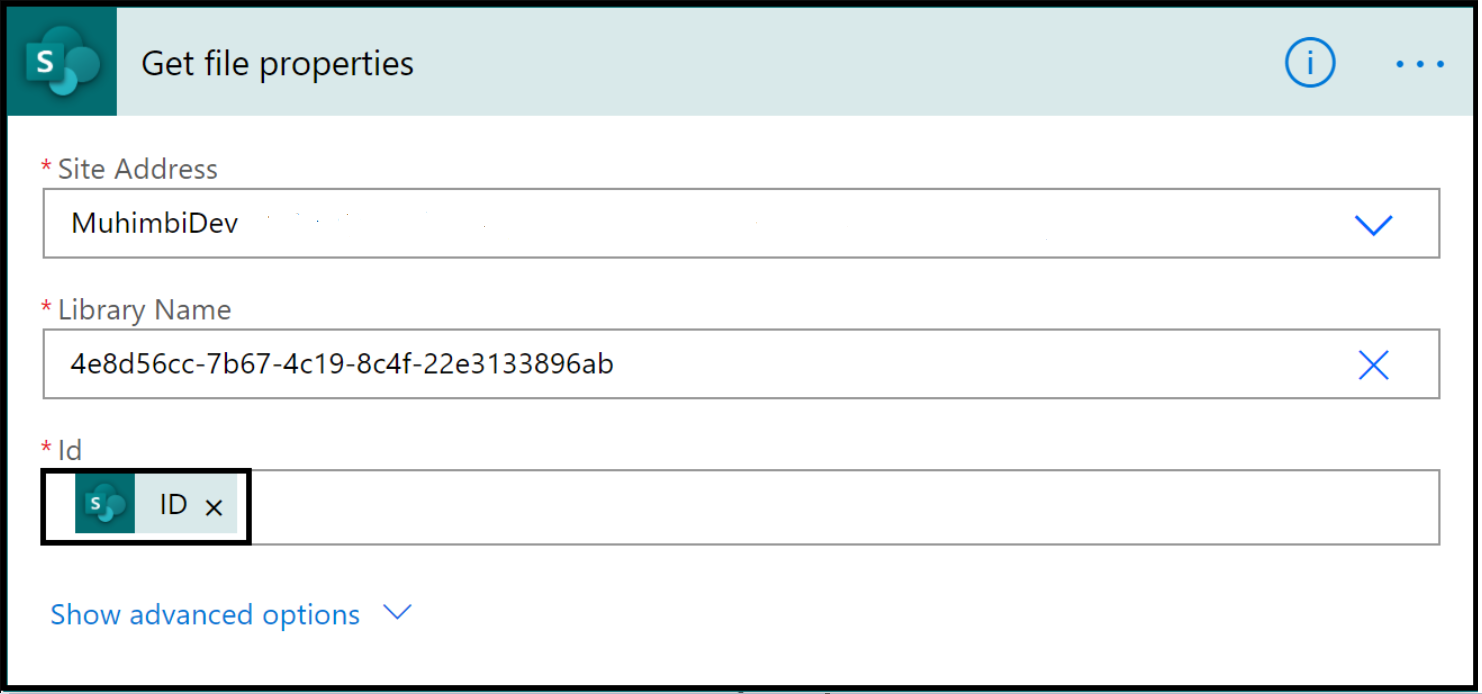

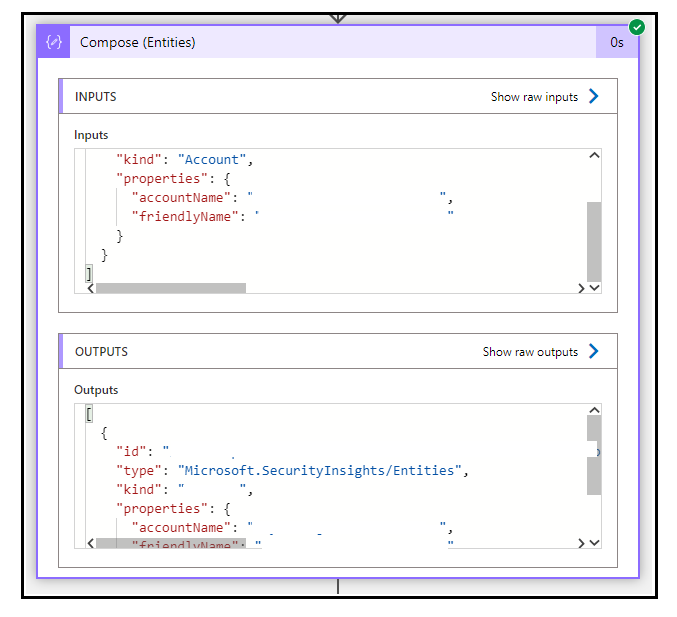

In the next action, Compose(Entities), we use the Compose action to grab the entities. All the entities mapped to the incident become available as the output of this action.

The next action, Parse Compose(Entities), parses the JSON output of Compose(Entities) so that its values can be referenced by later actions.
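Outside of Logic Apps, the same "compose, then parse" idea can be sketched in a few lines of Python. The entity shape used here (a `kind` field and a `properties.address` field) is an assumption for illustration; check the actual output of your Compose(Entities) action in the run history and adjust the field names accordingly.

```python
import json


def extract_ip_addresses(entities_json: str) -> list[str]:
    """Return the addresses of all IP entities found in a JSON array of entities.

    Assumed (hypothetical) shape of each entity:
    {"kind": "Ip", "properties": {"address": "..."}}
    """
    entities = json.loads(entities_json)
    addresses = []
    for entity in entities:
        kind = str(entity.get("kind", "")).lower()
        address = entity.get("properties", {}).get("address")
        if kind == "ip" and address:
            addresses.append(address)
    return addresses


# Hypothetical sample payload with one IP entity and one non-IP entity.
sample = json.dumps([
    {"kind": "Ip", "properties": {"address": "203.0.113.10"}},
    {"kind": "Account", "properties": {"accountName": "someone"}},
])
ips = extract_ip_addresses(sample)
```

Entities of other kinds are skipped, so only the mapped IP address is carried forward.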

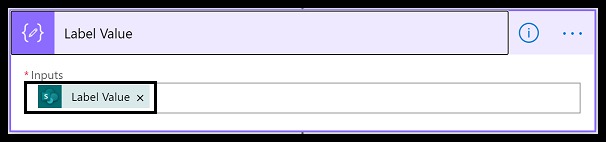

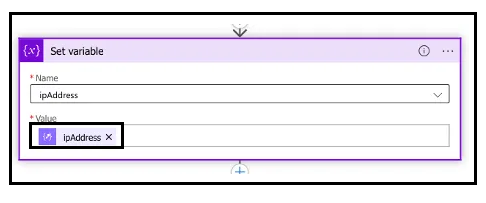

In the Set Variable action, we set the variable ipAddress to the value made available by the Parse Compose(Entities) action.
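Before handing the value to an external service, it can be worth checking that it really is a public IP address. This is not part of the logic app shown here; it is an optional sanity check, sketched in Python with the standard `ipaddress` module. The helper name `is_public_ip` is made up for this example.

```python
import ipaddress


def is_public_ip(value: str) -> bool:
    """Return True if value parses as an IP address that is globally routable."""
    try:
        ip = ipaddress.ip_address(value.strip())
    except ValueError:
        # Not a valid IPv4 or IPv6 address at all.
        return False
    return ip.is_global


# Private or malformed values would be skipped instead of being looked up.
candidates = ["8.8.8.8", "10.0.0.5", "not-an-ip"]
lookups = [c for c in candidates if is_public_ip(c)]
```

A reputation lookup on a private or malformed address would not tell you much, so filtering those out first avoids wasted API calls.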

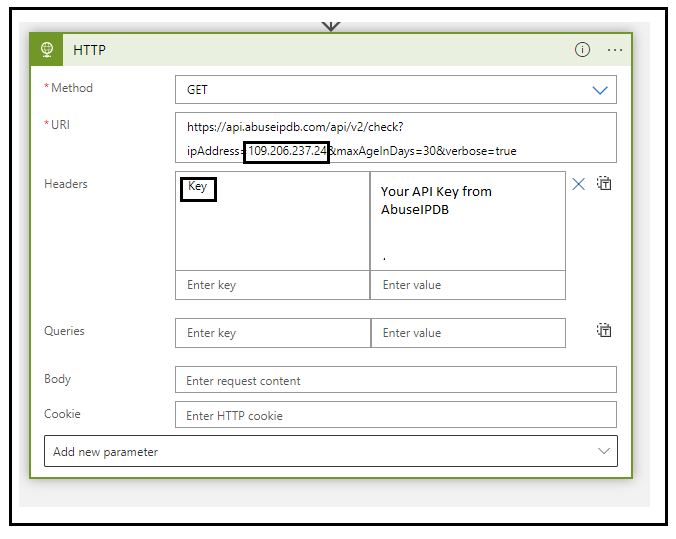

In the final action, we configure an HTTP action and call the AbuseIPDB API to get the abuse report and abuse score.

URI -

https://api.abuseipdb.com/api/v2/check?ipAddress=<ip_Address>&maxAgeInDays=30&verbose=true

Method -

GET

Headers -

Key: <your AbuseIPDB API key>

In the action below, I have purposely hardcoded the ipAddress to show you the IP in question; you can pass the output of the Set Variable action instead.

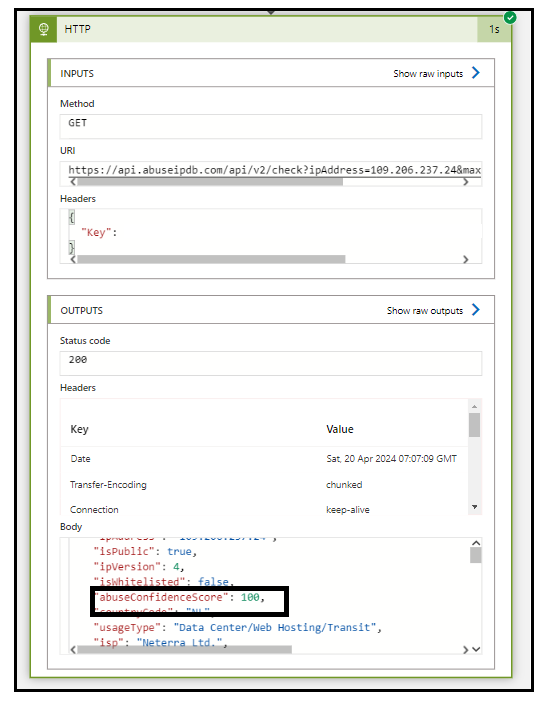
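For readers who want to test the call outside of Logic Apps, here is a rough Python equivalent of the HTTP action, using only the standard library. It builds the request without sending it. The URI and the `Key` header come from the settings above; the `Accept: application/json` header, the function name, and the placeholder key are assumptions for this sketch.

```python
from urllib.parse import urlencode
from urllib.request import Request

CHECK_URL = "https://api.abuseipdb.com/api/v2/check"


def build_check_request(ip_address: str, api_key: str, max_age_days: int = 30) -> Request:
    """Build (but do not send) a GET request mirroring the HTTP action above."""
    query = urlencode({
        "ipAddress": ip_address,
        "maxAgeInDays": max_age_days,
        "verbose": "true",
    })
    return Request(
        f"{CHECK_URL}?{query}",
        # "Accept" is an assumption added here so the response is JSON.
        headers={"Key": api_key, "Accept": "application/json"},
        method="GET",
    )


# "YOUR_API_KEY" is a placeholder; use the key from your AbuseIPDB account.
request = build_check_request("203.0.113.10", "YOUR_API_KEY")
```

To actually send it, you could pass `request` to `urllib.request.urlopen` and read the response body, keeping the API key out of source control.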

Here is what the output of the HTTP action looks like in the logic app's run history.

In the Body, you can see a field called “abuseConfidenceScore”. The abuse confidence score is AbuseIPDB's calculated evaluation, on a scale of 0 to 100, of how abusive the IP is, based on the users who reported it.
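Reading the score out of the response body is a one-liner once the JSON is parsed. The sketch below assumes the score sits under a top-level `data` object, which is how the AbuseIPDB check response is laid out to my knowledge; the sample body is trimmed down and made up for illustration, so compare it against what you see in your own run history.

```python
import json


def get_abuse_score(body: str) -> int:
    """Return the abuseConfidenceScore from a check response body."""
    payload = json.loads(body)
    return int(payload["data"]["abuseConfidenceScore"])


# Hypothetical, shortened response body for illustration only.
sample_body = json.dumps({
    "data": {"ipAddress": "203.0.113.10", "abuseConfidenceScore": 100}
})
score = get_abuse_score(sample_body)
```

In the logic app itself, the equivalent would be a Parse JSON action on the HTTP body, followed by a reference to the same field.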

That’s it. You can now modify the logic app as you see fit to make a decision in the next step. For example, you can use a Send Email action to notify your stakeholders (the cybersecurity team) about the abusive IP that was involved in the deletion, so that they can block it.
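As a sketch of what that decision step might look like, the Python snippet below only produces a notification when the score crosses a threshold. The threshold of 50, the function name, and the message wording are all arbitrary choices for this example; pick values that suit your own environment.

```python
from typing import Optional


def build_alert(ip_address: str, score: int, threshold: int = 50) -> Optional[dict]:
    """Return an email-style alert if the score meets the threshold, else None."""
    if score < threshold:
        return None
    return {
        "subject": f"Abusive IP {ip_address} involved in Key Vault deletion",
        "body": (
            f"IP address {ip_address} has an abuse confidence score of {score}. "
            "Please review the incident and consider blocking this address."
        ),
    }


# A low score produces no alert; a high score produces a message to send.
quiet = build_alert("203.0.113.10", 10)
alert = build_alert("203.0.113.10", 100)
```

In Logic Apps, the same check would be a Condition action on the score, with the Send Email action in the "true" branch.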

Step 4: Connecting it all together

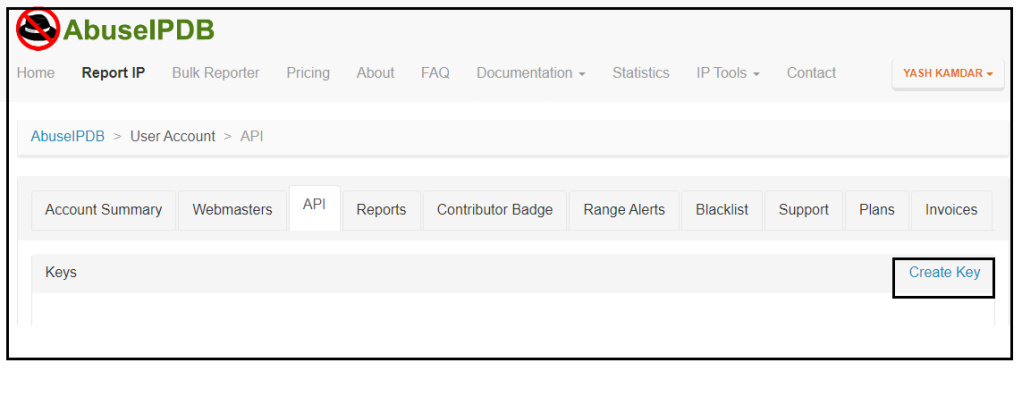

Note that you need to create an AbuseIPDB account to get an API key, which then needs to be used in the HTTP action.

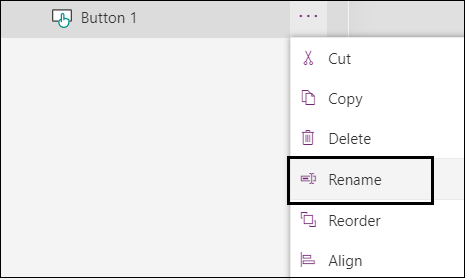

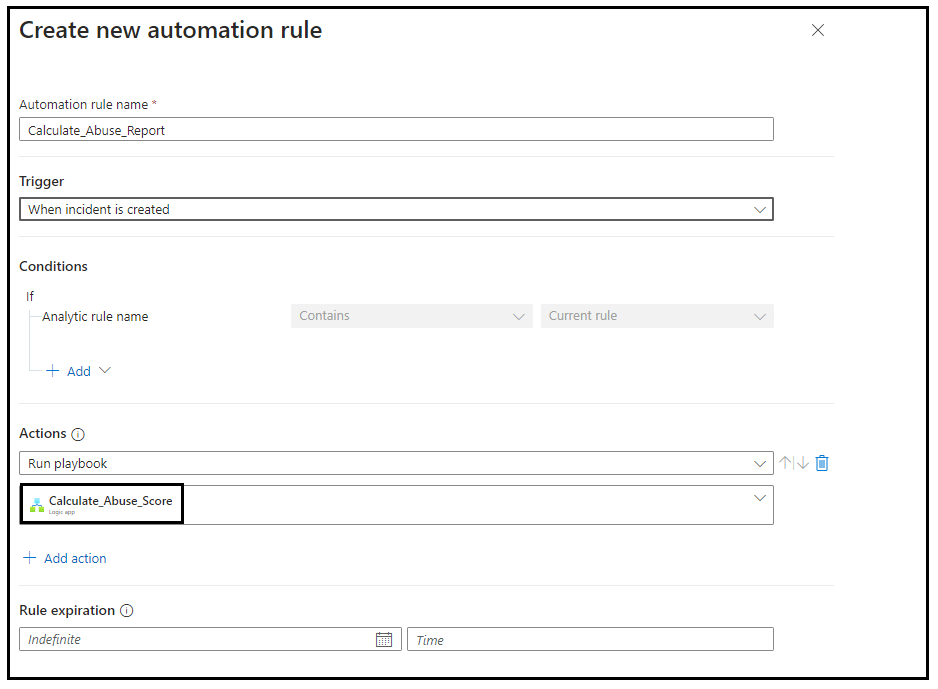

Once the logic app is configured, go back to your analytics detection rule in Sentinel and perform one last step to integrate the logic app with the rule, as shown below.

Conclusion

By leveraging Logic Apps in conjunction with Microsoft Sentinel and AbuseIPDB, organizations can establish a robust security posture capable of swiftly identifying and mitigating potential threats. The seamless integration of these services enables automated threat detection, analysis, and response, empowering security teams to stay ahead of evolving cyber threats.

In today’s ever-changing threat landscape, proactive security measures are indispensable. With Logic Apps, organizations can harness the power of automation and intelligence to fortify their defenses and safeguard their assets against emerging threats.

Start harnessing the power of Logic Apps today and take proactive steps towards bolstering your security posture in an increasingly interconnected world.